
Quick Start to PLEAK

Managing Models

- Models can be created and organized in the My Models tab

- Models can be imported by first creating a new model (with the desired name for the model to be imported) and then importing the desired file when opening the new model

- Models can be exported as `*.bpmn` files from the burger menu

  - Exported models include all the Pleak-specific information (e.g. scripts and data specifications)

- Clicking on the model name opens the BPMN modeler

- Pleak editors (for adding analyzer-specific information and accessing the analyzers) can be opened from the burger menu in the model's row

- Models can be shared with other Pleak users using the Share option

  - Models shared with the user appear under the Shared models tab

- A public link to the model can be created with the Publish option in the burger menu

  - Published models can be analyzed via the public link, but they cannot be modified there

  - This option can be used to distribute Pleak analysis results

- The user should start from the BPMN modeling tool to create the base model for Pleak; the analyzer-specific editors can then be used to add other information (such as privacy technologies or computation scripts) to the model

- A quick overview of Pleak capabilities can be seen in this video and in this YouTube playlist

Overall Modeling Guidelines

Pleak focuses on analyzing data processing, so modeling data objects correctly is crucial for a correct analysis. Pleak does not support the full BPMN specification, and the details of the supported syntax depend on the analyzer; however, there are some general guidelines and conventions shared by the analyzers.

- Each task should perform one action (rule of thumb: if your task description contains “and”, it may confuse the analyzer). Typical actions are:

  - Sending or receiving data (receiving is usually done with a message catch event)

  - A Protect or Open step of a privacy technology

  - Data processing

- Each task should have its input and output data clearly marked with incoming and outgoing data associations, respectively

  - For most analyzers, only data processing tasks that have inputs and output(s) are meaningful; the main exceptions are sending tasks and, for BPMN Leaks-When, the task before an exclusive gateway

- For message flows that send data, we assume that the sending task has as input the data sent over the network, and that the receiving task or message catch event has the same data as output

  - A sending task should have no output

  - Data processing and sending must be modeled as separate tasks

- All data communication between pools should occur via message flows, as specified above

  - In short, all communication should follow the general pattern shown in this Pleak model

- Each pool should have one process (e.g. only one start event)

- For analyzers that support branching, the default flow should be marked

- If two data objects have the same name, the analyzers consider them to be the same data

- Each opening (splitting) parallel gateway should be closed by a matching joining parallel gateway

- Some analyzers may not support spaces in model names, including the space before the “(copy)” suffix that is added when you copy a model

- Pools, lanes and data objects should have unique names

- Avoid starting data object names with the word “entity”, since it may cause errors when parsing the XML of the model file

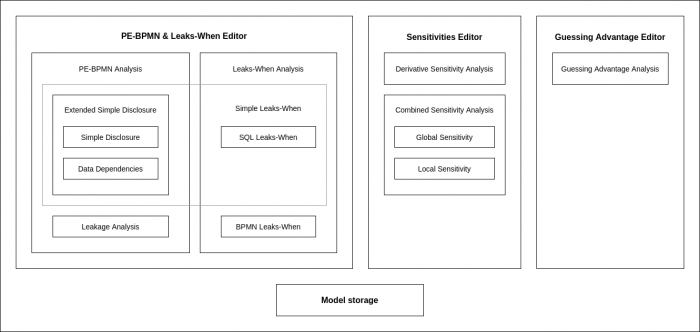
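As an illustration, a few of the guidelines above can be checked automatically on an exported `*.bpmn` file before running an analyzer. The sketch below is hypothetical and not part of Pleak: the `check_model` function, its warning messages and its heuristics are assumptions. It uses Python's standard `xml.etree.ElementTree` with the standard BPMN 2.0 namespace, treats any element that is the source of a message flow as a sending task, approximates "one process per pool" as exactly one start event per process, and only compares the counts of splitting and joining parallel gateways. Data objects are deliberately not checked for duplicate names, since equally named data objects denote the same data.

```python
import xml.etree.ElementTree as ET
from collections import Counter
from typing import Union

# Standard BPMN 2.0 model namespace used in .bpmn exports.
NS = {"bpmn": "http://www.omg.org/spec/BPMN/20100524/MODEL"}


def check_model(xml_data: Union[str, bytes], model_name: str) -> list[str]:
    """Hypothetical helper: return warnings for a few Pleak modeling guidelines."""
    root = ET.fromstring(xml_data)
    warnings: list[str] = []

    # Some analyzers may not support spaces in model names (e.g. the " (copy)" suffix).
    if " " in model_name:
        warnings.append(f"model name {model_name!r} contains spaces")

    # Pools and lanes should have unique names.
    names = [el.get("name") for el in root.iterfind(".//bpmn:participant", NS)]
    names += [el.get("name") for el in root.iterfind(".//bpmn:lane", NS)]
    for name, count in Counter(n for n in names if n).items():
        if count > 1:
            warnings.append(f"pool/lane name {name!r} is used {count} times")

    # Data object names starting with "entity" may break XML parsing.
    for ref in root.iterfind(".//bpmn:dataObjectReference", NS):
        if (ref.get("name") or "").strip().lower().startswith("entity"):
            warnings.append(f"data object {ref.get('name')!r} starts with 'entity'")

    # Sending tasks (assumed: sources of message flows) should have no output data.
    by_id = {el.get("id"): el for el in root.iter() if el.get("id")}
    for flow in root.iterfind(".//bpmn:messageFlow", NS):
        sender = by_id.get(flow.get("sourceRef"))
        if sender is not None and sender.find("bpmn:dataOutputAssociation", NS) is not None:
            warnings.append(f"sending task {sender.get('id')!r} has output data")

    for process in root.iterfind("bpmn:process", NS):
        pid = process.get("id")
        # One process per pool, approximated here as exactly one start event.
        starts = process.findall("bpmn:startEvent", NS)
        if len(starts) != 1:
            warnings.append(f"process {pid!r} has {len(starts)} start events")

        flows = process.findall("bpmn:sequenceFlow", NS)
        out_deg = Counter(f.get("sourceRef") for f in flows)
        in_deg = Counter(f.get("targetRef") for f in flows)

        # Splitting parallel gateways should be matched by joining ones.
        # Only the counts are compared; proper nesting is not verified.
        gateways = [g.get("id") for g in process.findall("bpmn:parallelGateway", NS)]
        splits = sum(out_deg[g] > 1 for g in gateways)
        joins = sum(in_deg[g] > 1 for g in gateways)
        if splits != joins:
            warnings.append(f"process {pid!r} has {splits} parallel splits but {joins} joins")

        # Branching exclusive gateways should have a default flow marked.
        for gw in process.findall("bpmn:exclusiveGateway", NS):
            if out_deg[gw.get("id")] > 1 and not gw.get("default"):
                warnings.append(f"exclusive gateway {gw.get('id')!r} has no default flow")

    return warnings
```

For example, `check_model(open("model.bpmn", "rb").read(), "my_model")` (with a hypothetical file name) returns an empty list when none of these checks fire; passing the file contents as bytes lets the parser follow the file's own XML encoding declaration. An empty result does not guarantee that a given analyzer accepts the model, since the supported syntax differs between analyzers.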

Pleak Editors and Analyzers

Choosing Appropriate Analysis

Pleak offers a range of analysis capabilities, each with its own benefits and restrictions. As a starting point, the user can run the visibility analysis offered by the disclosure tables, which is also available for plain BPMN models. Then, depending on the process, the model can either be enhanced with PETs (PE-BPMN stereotypes), or the operations of the tasks can be specified with computation scripts. Specifying the computations enables qualitative leakage analysis with the Leaks-When analyzers, which summarize the data processing to highlight dependencies between the generated data objects and the inputs of the process. Finally, if something is also known about the input data and the workflow computes an aggregation (for guessing advantage with collaboration models, this can also be an intermediate step), sensitivity analyzers can be used to quantify the leakage.

The following table summarizes the analyzers; for more details, see the page of the analyzer of interest.

| | Simple and Extended Disclosure | Leakage Detection | BPMN Leaks-When | SQL Leaks-When | Global Sensitivity | Combined Sensitivity | Guessing Advantage |
|---|---|---|---|---|---|---|---|
| Model Type | Collaboration (multiple pools allowed) | Collaboration (multiple pools allowed) | Collaboration (multiple pools allowed) | Data processing workflow (single process) | Data processing workflow (single process) | Data processing workflow (single process) | Data processing workflow or Collaboration |
| Model restrictions | Only meaningful for multiple pools | Reasonable for models with branching | One start event only (over all the pools) | No branching | No branching | No branching; final query has a numeric output (aggregation query) | |
| PETs support | All stereotypes | Secret sharing, Encryption | Encryption, Secure Channel | Partial support through extended disclosure report integration | Differential privacy | Differential privacy | Differential privacy |
| Script language | - | - | Pseudocode | PostgreSQL | PostgreSQL | PostgreSQL | PostgreSQL |
| Input data | - | - | - | - | - | Required | Required |
| Other possible inputs | - | - | - | Data sharing policy | - | Attacker's prior knowledge about the data | |