User Tools

Sidebar

Table of Contents

SQL combined sensitivity analyser

SQL combined sensitivity analyser has a a user-facing frontend application that allows to extend models by attaching SQL scripts to its elements. SQL queries are added to tasks and SQL database information added to data objects. SQL information is attached by adding specific labels into the XML code of the model. The editor uses SQL combined sensitivity analysis tool to perform an analyze on the extended model to combine and present the results. Editor and analysis tool have separate codebases, but they are both required to use the full functionality of the analyser. Communication between the two components is arranged by backend REST service.

Description

Sensitivity of a function is the expected maximum change in the output, given a change in the input of the function. Sensitivity is the basis for calibrating the amount of noise to be added to prevent leakages on statistical database queries using a differential privacy mechanism. The analyzer computes sensitivity of an SQL workflow and reports the amount of noise that we need to tolerate to achieve ε-differential privacy for desired ε.

Quick guide

SQL combined sensitivity analyzer is accessible through Actions menu (with burger-menu icon) under each model in own and shared models/folders lists of frontend - link “Open in SQL CS editor”. Different capabilities of the analyzer are also demonstrated on a particular example model.

Adding SQL scripts

Clicking on a task opens two window tabs on the right side of the page (in sidebar): Output table schema and Output table query.

The tab Output table schema expects schema of the table that comes out as the result of computation in the given task. The schema description comes in SQL language in form of a CREATE TABLE statement.

CREATE TABLE t ( var_1 type_1, ... var_n type_n );

The analyzer supports all data types that the underlying PSQL engine recognizes. However, only those starting with “int” and “float” are interpreted as numerical. All the other data types are treated as strings.

The tab Output table query expects an SQL SELECT-statement as an input. The output of this statement should be compatible with the schema described in Output table schema. As the goal of CS analyzer is to ensure differential privacy by adding noise to the final output of an SQL workflow, the last task (the one followed by a end event) should return a numerical value, i.e the output of that task should be an aggregation. Grouping by a table attribute (but not a complex expression) is allowed. The analyzer expects that the group columns come first, and the aggregation is the last one. Currently supported aggregations are COUNT, SUM, MIN, MAX, and only one aggregation per task is allowed.

SELECT

query_expression

FROM

table_1 AS alias_1,

...

table_n AS alias_n

WHERE

condition

;

All the intermediate tasks (the ones not followed by a end event) may as well contain SELECT-statements without aggregations.

Adding table data

Clicking on a data object opens several window tabs on the right side of the page (in sidebar) for entering table schema, contents, and norm information.

Table schema

The window tab Table schema contains a schema description in SQL language in form of a CREATE TABLE statement, similarly to Output table schema of a task object.

CREATE TABLE t ( var_1 type_1, ... var_n type_n );

Table data

The window tab Table data initially contains an empty table object where rows are labeled with numbers and columns with letters. More rows and columns can be added or deleted by right clicking on the table and choosing appropriate action from the menu.

The labels are immutable. Hence, the actual table column headings should be inserted into the first editable row. Use the same attribute names that are defined in the table schema.

| A | B | C | |

|---|---|---|---|

| 1 | var_1 | var_2 | var_3 |

| 2 | x11 | x21 | x31 |

| 3 | x12 | x22 | x32 |

| 4 | x13 | x23 | x33 |

Table norm

The goal of differential privacy is to conceal the fact that a table has been modified by a unit change, e.g. adding or removing one row. The window tab Table norm tells what this unit is.

The simplest way is to tell which rows and which columns are sensitive, so DP will take care of changes in these particular cells.

rows: i_1 i_2 ... i_n ; cols: attr_1 attr_2 ... attr_n ;

While columns are defined by the names of corresponding attributes, the rows are indexed by the order they are present in the data table, starting from 0. If all rows or columns are sensitive, the keyword all can be used instead of listing all of them. If no rows or columns are sensitive, use the keyword none.

For int and float data, the default distance is defined as the numeric difference in value of the corresponding cells. For text and bool data, the distance between two cells is 1 iff they contain different data. The distance between two tables is the sum of differences of all their cells.

It is possible to define more complicated distances for tables, e.g. scaling them, so that one attribute is considered more sensitive than the other one. The details can be found at analyser advanced settings.

Running analysis

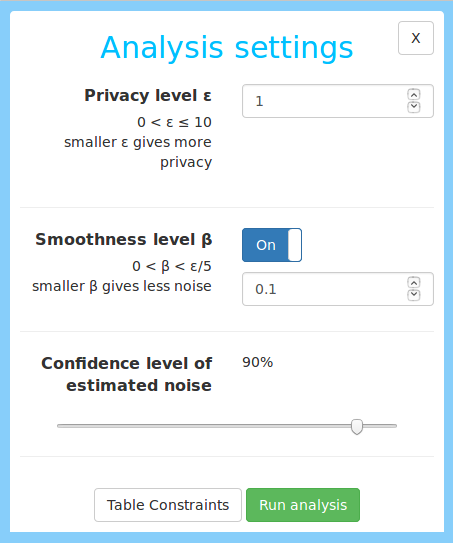

Clicking on Analyze button opens a menu entitled Analysis settings on the right side of the page (in sidebar). There you can adjust “Privacy level ε” and “Smoothness level β” parameters. Simply put, smaller ε means more privacy. For simplicity, set β radio button to off, as it does not affect privacy and is needed for noise optimization.

The slider Confidence level of estimated noise, which ranges between 0% and 100%, fixes the probability with which the noise stays below reported bound. The larger is confidence, the larger is the noise. For 100% confidence, the noise would be infinite, as the noise distribution is unbounded.

Sub-button Table constraints allows to add information that may be helpful for getting better noise level. The details are given in analyser advanced settings.

Click on Run analysis button to run analysis. The results (entitled Analysis results) appear in the sidebar as well. The result is given for each of the input tables, and it consists of the following components.

- beta-smooth sensitivity is the β-smooth sensitivity of the query w.r.t. the distance defined in the table norm.

- actual outputs y are the true outputs of the query, without noise.

- p%-noise magnitude a is the additive noise magnitude, i.e. the noise stays below this quantity with probability p%.

- p%-realtive error |a|/|y| is the quotient of the additive noise and the query output. If there are several outputs, it is the quotient of corresponding vector norms.

Clicking View more provides more information. It tells which value of β was finally used (which can be important if β was optimized automatically), what is the underlying norm, and how much noise we would get using Laplace noise distribution (by default, the noise level is given for Cauchy distribution).

Source code

The source code of SQL combined sensitivity editor is available at pleak-sql-combined-sensitivity-editor and the source code of SQL combined sensitivity analysis tools at pleak-sql-analysis repositories. Installation details can be found at analyser installation guide.